AWS DLP, DSPM, and Data Discovery: Securing Sensitive Data in AWS with Strac

TL;DR:

- AWS Data Security is Critical – Organizations store vast amounts of sensitive data in AWS services like S3, DynamoDB, RDS, and Redshift. Protecting this data from unauthorized access and leaks is essential for security and compliance.

- DLP & DSPM Explained – Data Loss Prevention (DLP) stops unauthorized data exposure, while Data Security Posture Management (DSPM) provides continuous visibility into where sensitive data resides, who has access, and how it’s protected.

- Challenges with AWS-Native Tools – Amazon Macie offers basic DLP for S3 but lacks coverage for other AWS services and doesn’t provide remediation.

- Amazon Macie vs. Strac – Macie only scans S3 and alerts on risks, while Strac offers comprehensive AWS DLP & DSPM with automated sensitive data discovery, real-time access monitoring, remediation actions (e.g., redaction, blocking, encryption), and broad integrations across SaaS, cloud, Gen AI, and endpoint security.

- Compliance & Risk Reduction – Strac helps organizations meet regulatory requirements (PCI, HIPAA, GDPR, SOC 2) by continuously discovering, classifying, securing, and monitoring sensitive data.

Organizations rely on Amazon Web Services (AWS) to store and process vast amounts of sensitive data. Ensuring this data is safe from leaks and breaches is critical for both security and compliance. In this post, we explore AWS Data Loss Prevention (DLP), Data Security Posture Management (DSPM), and Data Discovery – what they mean in the AWS cloud context, how they help protect data across AWS services (S3, DynamoDB, RDS, Redshift, etc.), and why a comprehensive solution like Strac is ideal for achieving robust AWS data security. We’ll also provide a detailed comparison of Amazon Macie (AWS’s native data discovery tool) vs. Strac to highlight their features and benefits.

✨ Understanding AWS Data Loss Prevention (DLP) and DSPM

Data Loss Prevention (DLP) refers to strategies and tools that prevent sensitive information from leaving an organization’s environment without authorization. In practice, DLP solutions monitor and control data at rest, in use, and in transit to stop unauthorized disclosure of sensitive information. Within AWS, DLP might involve scanning data stored in cloud services and intercepting potential data leaks (for example, preventing an employee from downloading a confidential file from an S3 bucket to an unapproved device). Effective DLP implements policies to detect and block the transmission or exposure of private data such as customer PII, financial records, or intellectual property.

Data Security Posture Management (DSPM) takes a broader approach to cloud data protection. DSPM is about continuously knowing what data you have, where it lives, how it’s secured, and who has access to it across all your cloud services. In other words, DSPM acts as a watchdog over your cloud data estate, helping you identify sensitive data, assess its security posture (encryption, access controls, exposure risks), monitor for vulnerabilities or policy violations, and ensure compliance with relevant standards. In AWS, DSPM solutions automatically discover sensitive data resources across the environment, evaluate their security settings, and alert on or remediate any risks. This holistic view is crucial in complex AWS deployments so that no database, storage bucket, or data stream containing sensitive information goes unnoticed or unsecured.

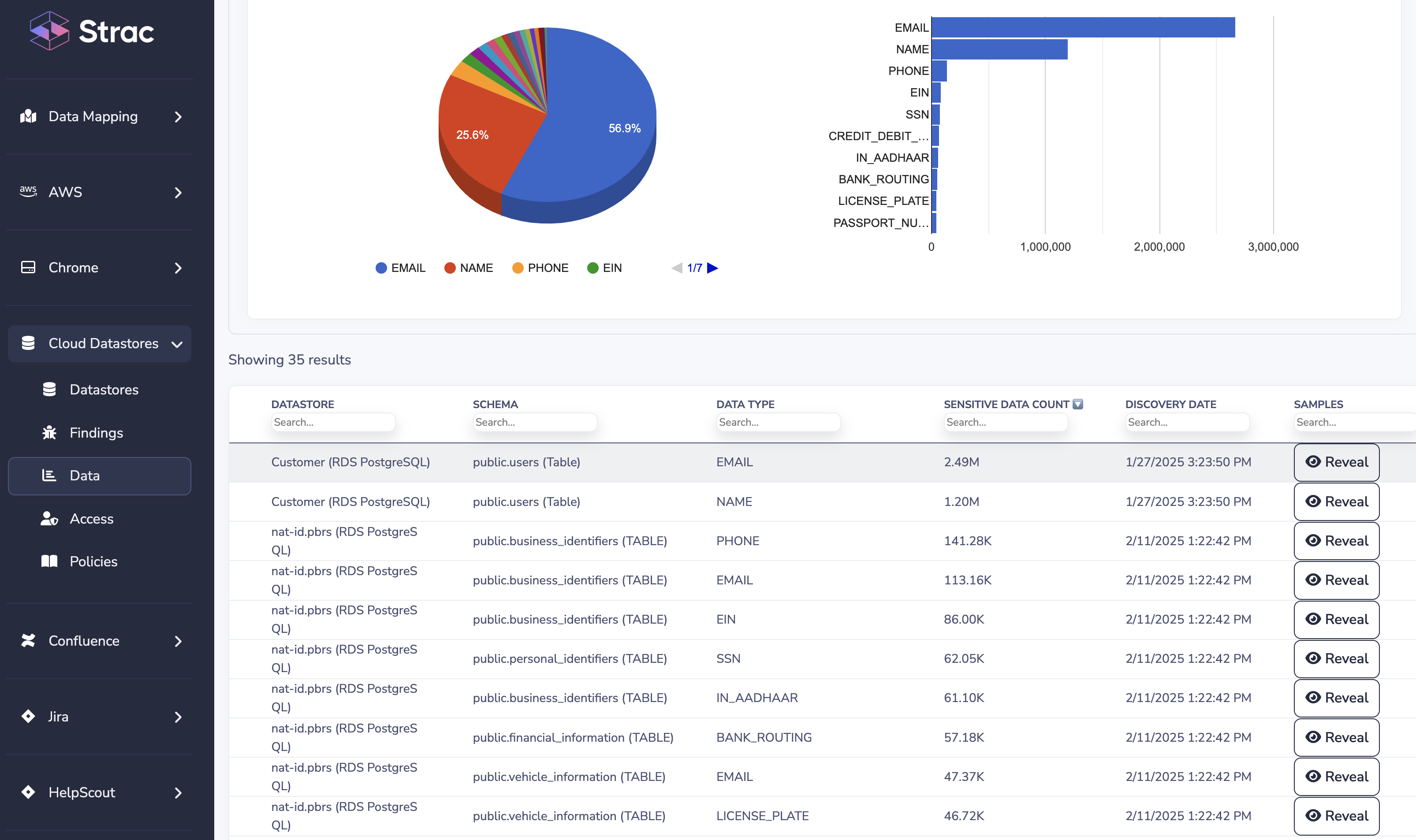

Data Discovery is the foundational element for both DLP and DSPM. Before you can protect data or manage its security posture, you must find and identify it. AWS environments often contain diverse data stores: files in Amazon S3 buckets, records in DynamoDB tables, relational data in RDS or Redshift, log files in CloudWatch or S3, etc. Data discovery involves scanning these structured and unstructured repositories to locate sensitive information such as Personally Identifiable Information (PII), Protected Health Information (PHI), financial data (like credit card numbers), secrets or API keys, and more. Modern solutions use machine learning and pattern matching to automatically classify discovered data by sensitivity (e.g., tagging a column in an RDS database as containing Social Security Numbers). This process establishes an inventory of sensitive data, which is vital for applying protections and tracking compliance. In fact, knowing where your sensitive data resides and who has access to it is vital, as many times sensitive data (like PHI) can inadvertently end up in places it shouldn’t be. Data discovery in AWS ensures there are no blind spots – you get full visibility into your data assets across all AWS services.
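
To make the pattern-matching half of discovery concrete, here is a minimal Python sketch. It assumes the text has already been pulled out of a data store (fetching it from S3, RDS, or elsewhere is out of scope), and the three patterns and the names `PATTERNS`, `find_sensitive`, and `classify` are illustrative choices, not Strac's or Macie's detectors. Real classifiers combine far more patterns with context and machine learning.

```python
import re

# Illustrative detectors only; a production classifier would use many more
# patterns plus contextual signals to cut false positives.
PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "EMAIL": re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b"),
    "AWS_ACCESS_KEY_ID": re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"),
}


def find_sensitive(text):
    """Return a sorted list of (label, matched_text) pairs found in text."""
    findings = []
    for label, pattern in PATTERNS.items():
        for match in pattern.finditer(text):
            findings.append((label, match.group()))
    return sorted(findings)


def classify(text):
    """Return the set of sensitive-data labels present in text."""
    return {label for label, _ in find_sensitive(text)}
```

Calling `classify` on each file or column sample gives a set of labels that can feed an inventory of where each kind of sensitive data lives.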

AWS DLP and DSPM for Data Stores (S3, DynamoDB, RDS, Redshift, etc.)

AWS offers a variety of data storage services, each of which can contain sensitive information that needs protection. A robust AWS data security strategy must span all these services:

- Amazon S3 (Simple Storage Service): A common source of data leaks when misconfigured, S3 stores everything from backups to data lakes. Sensitive files in S3 should be discovered and classified (for example, detecting files containing PII or credit card numbers) so that appropriate controls (encryption, access policies, or DLP rules) can be applied. Monitoring S3 access patterns is also important – unusual access or public exposure of a bucket can indicate a security risk.

- Amazon RDS (Relational Database Service): Many applications host their production databases on RDS (MySQL, PostgreSQL, SQL Server, etc.). These databases may contain customer profiles, health records, payment data, and other PII/PHI. Securing RDS involves classifying sensitive columns/tables, controlling who or what applications can query them, and auditing access. Data discovery tools can connect to RDS instances to scan for sensitive data patterns in tables (e.g. looking for columns that resemble Social Security Numbers or birth dates). Once identified, you can enforce stricter access controls or encryption on those data elements.

- Amazon DynamoDB: This NoSQL database can store unstructured or schemaless data, which might include JSON objects with sensitive fields. Automated discovery is valuable here to parse items in DynamoDB tables and flag any sensitive personal data. Continuous monitoring can then ensure that, for example, no unauthorized user or service is retrieving large amounts of that sensitive data.

- Amazon Redshift: As a data warehouse, Redshift often aggregates data from various sources (which may include sensitive information) for analytics. It’s critical to classify what sensitive data lands in Redshift. For instance, if customer PII is loaded for analytics, that should be identified and protected. Access to those datasets should be monitored and limited to necessary analysts. A DSPM solution can help map out where sensitive data flows into the warehouse and ensure it remains encrypted and accessed only by approved roles.

- Amazon CloudWatch Logs and Other Services: Even log data can contain secrets or personal data (e.g., an application error log in CloudWatch that accidentally records a credit card number or email address). AWS DLP tools should scan logs and other semi-structured data stores for leaks of sensitive info. If found, remediation could involve masking the data or adjusting the application to stop logging such information. Similarly, services like Amazon OpenSearch Service, Amazon SQS (queue messages), or Amazon EFS (file system) can all harbor sensitive data that requires discovery and protection.
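
Schemaless stores such as DynamoDB are a good example of why discovery has to walk the data rather than rely on a schema. The sketch below assumes items have already been read and deserialized into plain Python dicts and lists; the function name `scan_item` and the two patterns are hypothetical, chosen only for illustration.

```python
import re

SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
EMAIL_RE = re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b")


def scan_item(value, path=""):
    """Recursively walk a nested item and yield (field_path, label) per hit."""
    if isinstance(value, dict):
        for key, child in value.items():
            child_path = f"{path}.{key}" if path else str(key)
            yield from scan_item(child, child_path)
    elif isinstance(value, list):
        for index, child in enumerate(value):
            yield from scan_item(child, f"{path}[{index}]")
    elif isinstance(value, str):
        # Only string leaves are inspected in this sketch.
        if SSN_RE.search(value):
            yield (path, "SSN")
        if EMAIL_RE.search(value):
            yield (path, "EMAIL")
```

Reporting the field path rather than just the table name tells you which attribute needs masking or tighter access.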

Each AWS service has its own security capabilities (for example, S3 bucket policies/IAM roles, RDS encryption and parameter groups, etc.), but a unified data security posture is necessary. This means having an overarching view of sensitive data across all these services and consistently enforcing policies. Without centralized DLP/DSPM, security teams might miss, say, an S3 bucket full of personal data that was created outside of normal processes, or an old DynamoDB table backup that nobody remembers. By leveraging automated discovery and classification across S3, RDS, DynamoDB, Redshift, and more, organizations can break down data silos and apply uniform controls to all sensitive information in AWS.
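
As one example of a posture check that a centralized view would run across every bucket, the sketch below inspects an S3 bucket policy document for `Allow` statements open to any principal. It assumes the policy JSON has already been retrieved, and it is a deliberately rough heuristic: the helper names are hypothetical, statements with a `Condition` are skipped even though some conditions still leave a bucket effectively public, and AWS's own public-access evaluation is considerably more thorough.

```python
import json


def _is_wildcard_principal(principal):
    """True if the Principal element grants access to everyone."""
    if principal == "*":
        return True
    if isinstance(principal, dict):
        aws = principal.get("AWS", [])
        values = [aws] if isinstance(aws, str) else aws
        return "*" in values
    return False


def public_statements(policy_json):
    """Return Allow statements with a wildcard principal and no Condition."""
    policy = json.loads(policy_json)
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may appear unwrapped
        statements = [statements]
    return [
        s for s in statements
        if s.get("Effect") == "Allow"
        and _is_wildcard_principal(s.get("Principal"))
        and "Condition" not in s
    ]
```

Joining the output of a check like this with discovery results is what turns "this bucket is public" into "this public bucket contains PII".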

✨ AWS S3 DLP & DSPM: Data Classification, Access Control, Monitoring, and Remediation

After discovering where sensitive data resides in AWS, organizations should implement data classification, access control, continuous monitoring, and remediation as key components of their data protection strategy:

- Data Classification: Classification means labeling data based on sensitivity or type (e.g., marking data as Public, Internal, Confidential, Regulated, etc.). In AWS, once data discovery scans identify sensitive content, each finding can be tagged with a classification (for example, a document in S3 might be tagged as containing PHI, or a database field classified as PCI data). Proper classification is crucial because it informs what security controls and handling procedures are needed. Solutions like Strac use machine learning to accurately categorize sensitive data, minimizing false positives. With an extensive catalog of sensitive data elements, Strac’s classification engine can recognize everything from phone numbers and health record IDs to API keys, ensuring no sensitive information goes unnoticed. See https://www.strac.io/blog/dspm-vs-dlp#strongdifferences-between-dspm-vs-dlpstrong

- Access Control: Strong access controls ensure that only authorized personnel or applications can access sensitive data. In AWS, this includes using IAM roles and policies, bucket policies, database user privileges, etc., configured according to the principle of least privilege. A DSPM solution assists by providing visibility into who has access to what data. For instance, Strac can show which users or roles have access to a sensitive S3 bucket or a particular database table. It enables defining role-based access control policies (e.g., only the HR team’s IAM role can access files classified as HR data) and can detect when access rights are too broad. By tightening permissions and removing excessive access, the risk of insider leaks or accidental exposure diminishes. Ongoing posture management means these access controls are regularly evaluated and updated as your AWS environment changes.

- Continuous Monitoring & Alerting: Once controls are in place, continuous monitoring is needed to catch any violations or anomalies in real time. This includes monitoring data access patterns (for example, a user suddenly downloading gigabytes of sensitive data from S3 or an application unexpectedly reading sensitive DynamoDB records after hours). AWS provides CloudTrail and CloudWatch events for tracking actions, but a DLP/DSPM solution like Strac correlates these events with knowledge of where sensitive data lives. It can generate alerts when sensitive data might be at risk – for example, if a normally private S3 bucket with PII becomes publicly accessible or if a credential leak leads to mass access of a sensitive RDS table. Monitoring also covers data in motion; for example, Strac’s endpoint and SaaS integrations can detect if someone tries to copy confidential data from AWS storage into an unapproved chat or email. The goal is to detect incidents before they turn into full-blown breaches. In line with industry best practice, DLP solutions watch data in use, in motion, and at rest to catch suspicious activity and stop leaks.

- Remediation: When a security incident or policy violation is detected, swift remediation is essential to mitigate damage. Remediation can take many forms in AWS: quarantining sensitive files (e.g., automatically removing or encrypting an S3 object that contains secrets), revoking access (e.g., disabling an IAM key that is accessing data it shouldn’t), or redacting sensitive information. Strac offers a range of remediation actions out-of-the-box. For instance, if it discovers credit card numbers in an S3 file that shouldn’t have them, it can automatically redact or mask those numbers. It can also block certain transfers (like preventing a download or external sharing), delete offending data, or simply send an alert, depending on policy. These measures ensure that even if sensitive data is found in an unsafe situation, it is promptly protected or removed. Additionally, Strac supports workflow integrations – for example, creating a ticket in an ITSM system or notifying security teams – to make sure the right people are aware and can follow up. By combining proactive controls with reactive remediation, organizations create a feedback loop: any incident leads to action, which prevents future incidents of a similar nature.
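
The redaction and masking actions described above can be illustrated with a small Python sketch. It is not a description of how Strac redacts data: the function names are hypothetical, the card pattern is a loose match on 13 to 19 digits with optional spaces or hyphens, and keeping the last four digits is simply a common masking convention assumed here.

```python
import re

SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_RE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def mask_card(match):
    """Replace all but the last four digits of a matched number with '*'."""
    digits = re.sub(r"\D", "", match.group())
    return "*" * (len(digits) - 4) + digits[-4:]


def redact(text):
    """Return text with SSNs replaced by a tag and card numbers masked."""
    text = SSN_RE.sub("[REDACTED-SSN]", text)
    return CARD_RE.sub(mask_card, text)
```

In practice the redacted copy would be written back over the original object or record, with the original quarantined if policy requires it.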

Compliance and Regulatory Considerations (PCI, HIPAA, GDPR, etc.)

Achieving strong data security in AWS isn’t just a best practice – it’s often a requirement to comply with various data protection regulations and industry standards. Here’s how AWS DLP/DSPM and data discovery tie into compliance:

- PCI DSS (Payment Card Industry Data Security Standard): If you store or process payment card information in AWS, PCI DSS compliance is mandatory. PCI DSS expects organizations to know where cardholder data is located and to protect it. Requirement 3 governs stored cardholder data, including keeping storage to a minimum through retention and disposal policies, and that is only achievable once every location holding cardholder data (especially any unencrypted data) has been identified. Data discovery tools help organizations scan AWS data stores to find any credit card numbers that might be in logs, backups, or databases. Once found, the data can be encrypted or purged if not needed, as PCI DSS also mandates minimal retention. Classification and DLP controls ensure that card data is not emailed out, uploaded to unapproved locations, or otherwise leaked. By using Strac’s platform to continuously discover and secure cardholder data, organizations can more easily meet PCI requirements related to restricting data access, encryption, monitoring, and breach prevention.

- HIPAA (Health Insurance Portability and Accountability Act): Companies managing healthcare data or PHI in AWS must adhere to HIPAA’s Security Rule and Privacy Rule. A core requirement of HIPAA is to implement safeguards that ensure the confidentiality and integrity of PHI. This is practically impossible without knowing where PHI resides. Often, PHI can be scattered across different systems – a patient record might be stored in an RDS database, while insurance documents are stored in S3. As noted, many times PHI can inadvertently end up in places it shouldn’t be, so having full visibility into what PHI you have, where it’s stored, and who can access it is vital. Data discovery and classification address this need by inventorying all PHI across AWS services. Once identified, Strac can help enforce encryption (at rest and in transit), access controls (only authorized healthcare personnel can see it), and auditing (tracking every access to PHI data). In case of any unauthorized access or leak, HIPAA’s Breach Notification Rule requires reporting incidents to affected individuals and regulators. Strac’s continuous monitoring and alerts assist in catching such incidents early, potentially limiting their scope. Additionally, by redacting or tokenizing PHI in non-production environments (like dev/test S3 buckets or logs), organizations can prevent avoidable exposures and thus maintain compliance.

- GDPR (General Data Protection Regulation): GDPR protects the personal data of individuals in the EU, whether or not your AWS environment is hosted in the EU. One of the first steps toward GDPR compliance is maintaining a personal data inventory – essentially knowing all the personal data your organization holds, where it’s stored, and how it’s used. This aligns directly with the goals of data discovery and DSPM. An AWS setup might hold personal data in many forms (user account info in DynamoDB, uploaded documents in S3, analytics data in Redshift, etc.). Strac’s discovery capabilities help create and maintain this inventory by identifying personal data across AWS. Moreover, GDPR emphasizes principles like data minimization and storage limitation (only keep data as long as necessary). With a clear view of your data, you can enforce retention policies (for example, flagging files that exceed retention or contain data that should have been deleted). GDPR also grants rights to individuals such as the right to access their data or request deletion (Right to be Forgotten). Fulfilling these requests is much easier when you have a searchable index of where an individual’s data might reside – something Strac can facilitate through its indexing of discovered data. Finally, GDPR requires robust protection of personal data (integrity and confidentiality). The combination of DLP (to prevent leaks) and DSPM (to continuously check security posture) in AWS helps demonstrate that you are taking appropriate measures to safeguard that personal data, thus meeting GDPR’s security obligations.

- Other Regulations (CCPA, SOC 2, etc.): Beyond the big three above, many other laws and frameworks require similar data security diligence. For instance, CCPA (California Consumer Privacy Act) mandates knowing and protecting consumers’ personal data, and allows consumers to opt out of the sale of their data or request deletion – again necessitating a comprehensive data inventory and control. SOC 2 (through its Trust Services Criteria) and ISO 27001 (through its control set) both require strong data security controls. No matter the specific framework, the common thread is that you must identify sensitive data and guard it. Implementing thorough AWS DLP/DSPM with Strac not only improves security but also generates the evidence (reports, logs, classifications, policies) to show auditors and stakeholders that sensitive data (be it customer PII, financial info, or intellectual property) is properly discovered, monitored, and protected in line with compliance requirements.
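
For the cardholder-data discovery mentioned under PCI DSS above, a digit pattern alone produces many false positives (order IDs, timestamps, and so on). A widely used filter is the Luhn checksum, which payment card numbers satisfy. The sketch below is a minimal illustration with hypothetical function names; passing the checksum does not prove a number is a real card, it only narrows the candidates.

```python
import re

# Candidate: 13 to 19 digits, optionally separated by single spaces or hyphens.
CANDIDATE_RE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


def luhn_valid(number):
    """Return True if the digits in number pass the Luhn checksum."""
    digits = [int(ch) for ch in number if ch.isdigit()]
    total = 0
    # Walk from the rightmost digit, doubling every second one.
    for position, digit in enumerate(reversed(digits)):
        if position % 2 == 1:
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return len(digits) >= 13 and total % 10 == 0


def find_card_numbers(text):
    """Return candidate strings in text that also pass the Luhn check."""
    return [m.group() for m in CANDIDATE_RE.finditer(text) if luhn_valid(m.group())]
```

Running `find_card_numbers` over log lines or exported rows gives a short list of likely card numbers to encrypt or purge.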

In summary, a well-implemented data discovery and protection program in AWS serves as a backbone for compliance. It gives you confidence that you’re not overlooking sensitive data and helps you enforce the controls that these regulations demand.

✨ Why Strac is the Ideal Solution for AWS DLP & DSPM

When it comes to securing sensitive data in AWS, Strac provides a comprehensive platform that covers the full spectrum of DLP and DSPM needs. Here’s why Strac stands out as an ideal solution for AWS data security:

- Holistic Sensitive Data Discovery: Strac automatically scans data across your AWS environment (and beyond) to find sensitive information wherever it lives. It leverages cutting-edge machine learning and OCR techniques to inspect both unstructured data (like documents in S3, text in support ticket systems) and structured data (database records, spreadsheets, etc.). This automated discovery runs continuously, so as new data gets created or ingested in AWS (say a new S3 bucket or a new DynamoDB table), Strac will detect and classify any sensitive content. By building a real-time inventory of PII, PHI, PCI, and other confidential data, Strac ensures you always have up-to-date visibility.

- Accurate Classification and Policy Enforcement: One of Strac’s core strengths is its highly accurate data classification engine. It uses an extensive library of data patterns (for things like credit card numbers, SSNs, addresses, health record identifiers, etc.) combined with contextual machine learning to reduce false positives. This means you can trust Strac’s labels on what is sensitive and what category it falls into. With these classifications, you can easily apply fine-grained security policies. For example, you could set a policy that any document classified as “Confidential - Customer Data” in S3 must be encrypted and not shared publicly, and Strac will automatically check and enforce that. Strac also allows custom policies to meet your organization’s unique needs, and it integrates with AWS native tagging so that classifications can tie into AWS resource tags if needed.

- Comprehensive Access Control and Monitoring: Strac provides deep insight into data access patterns and permissions. It not only shows who currently has access to each sensitive data source (e.g., which IAM roles can read a given S3 bucket of sensitive data) but also monitors how that access is used. Through continuous monitoring of AWS CloudTrail events, CloudWatch logs, and direct integrations, Strac can detect anomalies like a user accessing data they never accessed before or a sudden spike in data retrieval from a classified resource. By correlating identity and data, Strac helps enforce least privilege – you can easily spot and revoke unnecessary access. It can also integrate with AWS Security Hub and other SIEMs to feed its findings into your broader security operations. This level of access visibility and monitoring is a key part of DSPM, ensuring your AWS data security posture remains tight.

- Effective and Automated Remediation: Unlike tools that only alert you to problems, Strac enables you to fix them in real time. It offers a range of remediation actions that can be automated. Some examples: Strac can automatically redact sensitive fields in a document or message (useful for things like removing PII from support tickets or logs), it can mask or encrypt data in situ (for instance, replacing a plaintext credit card number in a database with a token or ciphertext), and it can block data egress (such as preventing a file download or external sharing if it contains restricted data). In AWS, Strac can remediate misconfigurations too – if an S3 bucket with sensitive data is found to be public, Strac can trigger a policy to tighten the ACL or notify an admin to do so. Remediation workflows can be customized: you might choose to auto-delete files that violate certain policies or quarantine them to a secure vault for review. By integrating with AWS Lambda or other automation, Strac’s alerts can also trigger custom scripts (for example, to disable a compromised IAM credential). This breadth of remediation ensures that simply detecting sensitive data is not where the process ends; Strac helps you close the loop by neutralizing threats and compliance issues quickly. Check out all the remediation techniques supported by Strac.

- Integrations Across SaaS, Cloud, Gen AI, and Endpoints: A key advantage of Strac is that its protection isn’t limited to AWS alone. Most organizations have a hybrid environment – some sensitive data might reside in AWS, but other data might live in SaaS applications (like Google Workspace, Office 365, Slack, Salesforce), be processed by AI services, or be stored on user endpoints. Strac offers deep integrations across all these domains. This means you can use Strac as a one-stop solution to discover and protect data not just in AWS storage and databases, but also in SaaS apps (e.g., scanning Slack messages or attachments for PII, monitoring Google Drive or OneDrive for sensitive files), in Generative AI platforms (e.g., preventing sensitive data from being pasted into ChatGPT or scanning outputs for leaks), and on endpoints like employee laptops (e.g., detecting if a user tries to copy a sensitive AWS report to a USB drive). Strac’s integration catalog is extensive – covering popular databases and data warehouses (Snowflake, Postgres, etc.), collaboration tools (Slack, Teams, Zoom), customer support systems (Zendesk, Front, Intercom), cloud providers (AWS, Azure), and operating systems (Windows, Mac, Linux). This breadth means that sensitive data discovered in AWS can be tracked and protected even as it flows to other environments. For example, if an AWS-hosted database export is downloaded and then emailed via Gmail, Strac can catch and redact that in the email too. Such end-to-end coverage is critical in modern workflows where data often moves beyond cloud boundaries. By integrating all these channels, Strac ensures consistent DLP policies are enforced everywhere, greatly reducing the chance of an unmonitored data leak.

- Regulatory Compliance and Reporting: Strac was built with compliance in mind. The platform provides out-of-the-box templates and rules tailored to regulations like GDPR, HIPAA, PCI DSS, SOC 2, CCPA, and more. This makes it easier to configure policies that meet specific legal requirements (for example, a HIPAA policy pack that looks for PHI and restricts its sharing). Strac also maintains detailed logs and audit trails of all discovery scans, classification decisions, policy alerts, and remediation actions. When it’s time for an audit or security assessment, you can generate reports showing your data inventory, where sensitive data is located, and evidence of how it’s protected (encryption status, access lists, etc.). These reports can demonstrate compliance with requirements such as GDPR’s Article 30 (records of processing activities) or PCI’s quarterly scan mandates. In short, Strac not only helps you comply with data protection regulations by enforcing the necessary controls, it also gives you the documentation and dashboards to prove it.

- Ease of Deployment and Use: Deploying Strac in an AWS environment is straightforward. It is an agentless solution – meaning you typically do not need to install heavy software on your servers or endpoints to use it. Strac connects via secure APIs and AWS integrations. For example, read-only access to S3 or databases can be granted for scanning purposes. Many integrations can be set up in under 10 minutes via OAuth or access keys. Once connected, Strac’s cloud platform begins scanning and you can manage everything through a unified dashboard. This simplicity is a big win for lean cloud security teams. Instead of juggling separate tools for each type of service (one for S3 DLP, another for endpoint DLP, etc.), Strac provides a centralized console to define policies and view incidents across all channels. The result is lower operational overhead and a more cohesive data security strategy. Additionally, Strac’s platform is built to scale with your AWS usage – whether you have tens or thousands of S3 buckets and databases, it can continuously monitor all without significant performance impact on your environment.
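
One of the monitoring signals mentioned in the list above, a user accessing sensitive data they have never accessed before, can be sketched in a few lines of Python. The event records here are simplified dicts with hypothetical `principal` and `resource` fields rather than the actual CloudTrail schema, and the `baseline` of previously observed access is assumed to come from an earlier learning period. This shows the idea only; it is not Strac's detection logic.

```python
def first_time_accesses(baseline, events, sensitive_resources):
    """Return (principal, resource) pairs for first-ever reads of sensitive data.

    baseline: dict mapping principal -> set of resources seen previously.
    events: iterable of dicts with 'principal' and 'resource' keys,
            assumed to be in chronological order.
    sensitive_resources: set of resource names classified as sensitive.
    """
    seen = {principal: set(resources) for principal, resources in baseline.items()}
    alerts = []
    for event in events:
        principal, resource = event["principal"], event["resource"]
        known = seen.setdefault(principal, set())
        if resource in sensitive_resources and resource not in known:
            alerts.append((principal, resource))
        known.add(resource)  # later reads of the same resource are not new
    return alerts
```

The same structure extends to volume-based signals, such as comparing bytes read per principal against that principal's usual range.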

In essence, Strac combines the strengths of DSPM (visibility, posture management, risk monitoring) and DLP (policy enforcement, leak prevention, remediation) into a single solution tailored for modern cloud-centric organizations. For companies heavily invested in AWS, Strac offers peace of mind that all sensitive data in AWS is discovered, secured, and compliant – and it extends that protection to the rest of your data ecosystem as well. The next section provides a side-by-side comparison of Amazon Macie vs. Strac to illustrate these points in more detail.

Amazon Macie vs. Strac: Feature Comparison

Amazon Macie is an AWS service focused on sensitive data discovery in the cloud, and Strac is a comprehensive data security platform. Both can play a role in AWS data protection, but they differ significantly in scope and capabilities. The table below compares key features and benefits of Amazon Macie and Strac:

Analysis: Amazon Macie is a powerful tool within its narrow focus – it provides an AWS-native way to find sensitive data in S3 and alert on risks. For organizations that primarily need to secure S3 buckets and want a fully AWS-managed experience, Macie is a suitable starting point. It uses machine learning to reduce manual effort in discovering PII and can integrate with AWS workflows. However, Macie’s limitations become apparent in any broader data security program: it doesn’t cover databases like RDS/DynamoDB, ignores any data outside of AWS, and won’t remediate issues for you. This is where Strac shines. Strac delivers a far more comprehensive solution that not only covers S3 but also every other place your sensitive data might live (cloud or on-prem or SaaS), and it takes action to protect that data.

In scenarios where an enterprise has diverse data repositories and stringent compliance requirements, Strac acts as a one-stop platform to manage it all. You get the convenience of a unified view and consistent policies across AWS and other systems, rather than siloed tools for each. Additionally, Strac’s active prevention capabilities mean it can stop data leaks in real time, which is beyond Macie’s scope (Macie’s alerts still rely on your team to intervene).

For AWS-heavy environments, it’s worth noting that Strac can integrate with AWS just as seamlessly – connecting via AWS APIs, feeding alerts to CloudWatch or Security Hub if desired – so you can maintain a cloud-native feel while extending protection. Ultimately, organizations aiming for robust cloud data security posture management and loss prevention will often find that Macie addresses only a subset of their needs, whereas Strac provides a comprehensive, defense-in-depth approach.

Conclusion

Data is the lifeblood of modern businesses, and nowhere is that more evident than in AWS cloud deployments where vast amounts of sensitive information are stored and processed. Ensuring this data is secure requires a combination of vigilant discovery, proactive monitoring, strict access control, and rapid remediation – the pillars of DLP and DSPM. AWS offers baseline tools (like Macie for S3) to help, but truly effective data protection often demands a unified solution that can cover all bases.

Strac emerges as an ideal solution for organizations looking to strengthen their AWS data security posture. By combining automated data discovery, accurate classification, and powerful remediation across AWS and beyond, Strac enables businesses to protect what matters most: their sensitive data and the trust of their customers and stakeholders. The platform’s business-friendly dashboards and reports keep stakeholders informed, while its technical depth meets the needs of security engineers and compliance auditors alike.

In short, if you’re operating in AWS and concerned about data leaks, misconfigurations, or compliance gaps, deploying a solution like Strac can drastically reduce your risk. It provides peace of mind that whether your sensitive data is in an S3 bucket, an RDS database, a Slack channel, or a team member’s laptop, you have the tools in place to discover it, secure it, monitor it, and keep it compliant at all times. In the evolving landscape of cloud data threats and regulations, such an integrated approach to AWS DLP and DSPM is not just advantageous – it’s essential for robust cloud security.
